Artificial Intelligence (AI) is increasingly built into the technologies we use in everyday life. Using AI to remove an unwanted object from the background of a photo or to draft a contract may seem benign, but the stakes are much higher when AI enters medical imaging, where patients' health is directly affected. To address this, several major radiological societies from around the world came together to publish *Developing, Purchasing, Implementing and Monitoring AI Tools in Radiology: Practical Considerations. A Multi-Society Statement From the ACR, CAR, ESR, RANZCR & RSNA*.

On Monday, January 22nd, this joint statement was published simultaneously in the Canadian Association of Radiologists Journal (CARJ), Insights into Imaging, the Journal of Medical Imaging and Radiation Oncology, the Journal of the American College of Radiology, and Radiology: Artificial Intelligence. The statement was coordinated by five international radiology societies: the Canadian Association of Radiologists (CAR), the American College of Radiology (ACR), the European Society of Radiology (ESR), the Radiological Society of North America (RSNA), and the Royal Australian and New Zealand College of Radiologists (RANZCR).

CAR member and co-author Dr. An Tang (Université de Montréal, CHUM) says the idea to publish the statement as a multi-society project came about during an international conference last year.

“The idea arose at the 2023 European Congress of Radiology (ECR) in Vienna when Dr. Adrian Brady, then Chair of the European Society of Radiology (ESR) Quality, Safety & Standards (QSS) Committee, proposed to write a multi-society paper on the adoption of AI in radiology,” Dr. Tang recalled. “Because of the nature of software, which can be written once and deployed everywhere across countries and jurisdictions, we felt that it was preferable to release jointly through several radiology societies across the world. Previous white papers and multi-society statements already covered ethical issues. The time was ripe for a paper on the adoption of AI tools.”

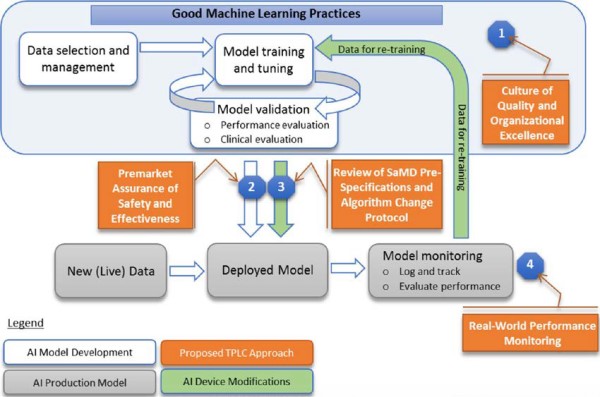

The statement sets out to define the “potential practical problems and ethical issues surrounding the incorporation of AI into radiological practice.” New AI-driven technologies differ from the computer-aided detection (CADe) and diagnosis (CADx) systems that have been commonplace in radiology for decades. “Modern AI algorithms, particularly those based on deep learning, fundamentally differ from traditional CAD by automatically learning relevant features from data without explicit definition and programming,” the statement points out. “Deep learning algorithms can learn to identify patterns in radiological images by being trained on large datasets and, in principle, can continuously learn and improve their performance as they are exposed to more data.”

Because of the practical problems AI may pose, its efficacy must be evaluated in new ways before clinical implementation. “Oversight and validation of AI software prior to widespread clinical adoption are critical for patient safety. We need to be able to ensure that models work at the ‘bedside’ as they were intended to work in the pre-market publications,” said Dr. Tang. “This cautious oversight is required to ensure trust, not only at the time of purchase and installation, but also during clinical usage through continuous post-market surveillance.”

Dr. Jaron Chong (Western University) is a co-author of the statement and is Chair of the CAR’s Standing Committee on AI. He notes that the data environment in which AI software operates is critical to its functioning.

“It's important to understand that these AI models are not operating in a static clinical environment and are often part of a complex interoperable network of ever changing hardware and software, many times in environments and with inputs not initially anticipated or evaluated by AI vendors,” he said. “Professional oversight is the best mechanism we have to capture the benefits of these technologies while reducing the risks and harms.”

Looking ahead, Dr. Tang notes that because AI software can be deployed across countries and jurisdictions, continued collaboration among international societies is important, particularly to evaluate software consistently across different populations and clinical settings.

“International cooperation is necessary to ensure that models still perform well with different populations and imaging equipment from different manufacturers, scanners, acquisition parameters, and post-processing,” he explained. “The performance of models can drift over time and international cooperation constitutes an additional mechanism to ensure long-term stability and safety of AI software.”